In his latest article for SHP, Dom Cooper discusses complexity and weighs predictability against uncertainty in near-miss, accident and incident investigations.

Recently in OSH, we’ve been told that accidents are emergences of complex systems (CS) and can’t be prevented. The notion that we must simply accept emergences and manage their outcomes, because they are unpredictable and unsolvable [i], [ii], is termed complexity absorption [iii].

We’re also told current OSH risk assessment [iv] and accident investigation methods [v] are ill-equipped to deal with CS. Such views make it worthwhile to explore whether a CS can be tamed and controlled.

What is complexity?

The Oxford English Dictionary defines complexity as:

- The quality or condition of being complex; or

- A complicated condition, a complication.

In systems thinking, complex and complicated systems are two separate domains. The former is nonlinear (where effects are not proportional to their inputs), and the latter is linear (where effects have clear causes). It seems complexity cannot be strictly defined, only situated in the space between order and disorder [vi]. This speaks to why complexity is deemed ‘ambiguous, context-dependent and subjective’ [vii], meaning ‘complexity is in the eye of the beholder’.

This becomes apparent in the competing positions: one argues that organisations are (by definition) ‘complicated’ linear entities operating in wider ‘complex’ environments (e.g., aviation, construction, oil & gas, shipping) [viii], while counter-arguments assert that organisational systems can simultaneously be simple, complicated and complex [ix]. Knowing which is which is vital for preventing unwanted events.

What features compose a complex system?

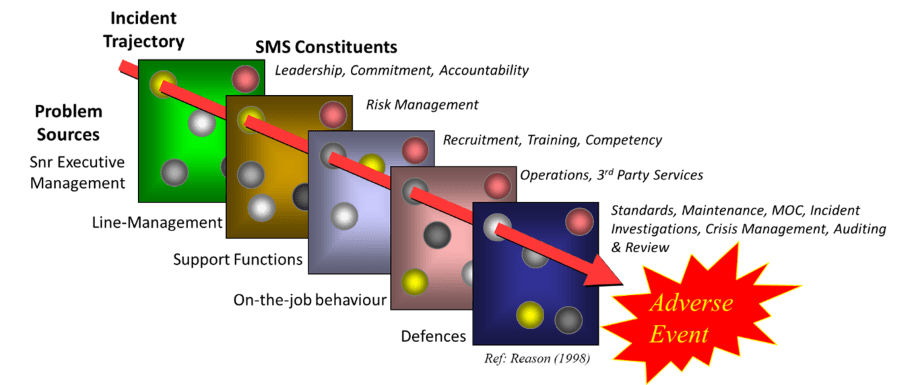

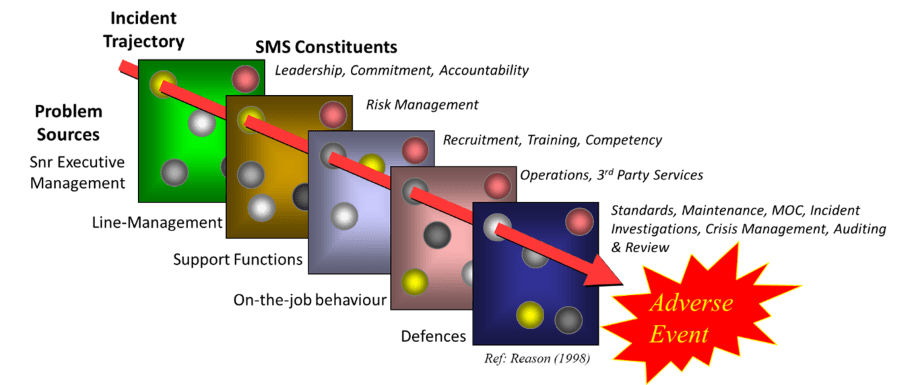

Figure 1: Interdependent constituents of an SMS aligned to an incident trajectory. (The holes at each layer represent problems that can align in multiple linear and nonlinear ways).

Michel Baranger, professor emeritus of physics, specified clear rules to facilitate identification of a CS [x]:

- A CS contains many constituents interacting nonlinearly. This might best be illustrated with a safety management system (SMS) comprising controlling elements and components related to senior management (leadership), line management (risk management), support functions (training), on-the-job behaviour (contractor management) and system defences (procedures, emergency management).

- The constituents of a CS are interdependent. Each layer of an SMS contains various nested and interdependent elements that all interact in some way at different levels and in different guises to create the entire system intended to manage risk. When the elements and components support each other, this interdependence is a source of strength; conflict or even the absence of support among them can be a source of weakness [xi].

- A CS always possesses a structure spanning several different, but interlinked, scales. Every scale has its own structure of constituents, hierarchically linked to each other, with coarser higher-level scales being dependent on lower, finer granular scales. SMS do not operate in a vacuum; they are one system among many comprising a broader system, in which there is a symphony of coordination and cooperation based upon a skeletal framework provided by the organisation’s structure, its strategy, its direction, its operations, its KPIs and its internal/external politics.

- A CS is capable of emerging behaviour. True CS can spontaneously self-organise because they lack any central control or authority governing their behaviour [xii], i.e., they can self-create emergences (e.g., accidents) from the interactions between a scale’s constituents. Poor Management of Change (MOC) systems, for example, combined with poor application of MOC procedures that eliminated or overrode governing risk controls, led to many process safety disasters (e.g., Chernobyl, Flixborough, Texas City, Macondo) [xiii].

- Complexity involves an interplay between chaos and non-chaos (unordered and ordered conditions). Imperial Sugar’s 2008 dust explosion [xiv], where steel covers had been installed on a belt conveyor system, provides an example. By design, the covers contained sugar dust (ordered). However, high concentrations of sugar dust also built up under the covers, as they lacked explosion vents and dust-removal equipment. An unknown heat source (thought to be an overheated bearing in the steel belt conveyor) ignited the high dust concentration, causing an initial explosion. Secondary explosions arose from disturbed accumulated sugar dust coating the floors and other structures (unordered). Parts of the building collapsed, 14 people died, 14 suffered life-changing injuries and 24 suffered lesser injuries.

- Complexity involves an interplay between cooperation and competition of the system’s scales. In OSH this could, for example, speak directly to the productivity–safety conflict within operations, where senior executives’ simultaneous need for cost-cutting and increased productivity is experienced within operations as inadequate resources, excessive production targets, and/or limited timeframes; BP’s Deepwater Horizon catastrophe is the classic case study.

Importantly, Baranger (p.11) [x] states, ‘in order to remain complex, all the previous 5 rules must obey Rule 6’. Thus, ‘Rule 6’ points to potential complexity reduction via the identification and control of any system linkages simultaneously featuring cooperation and competition.

Organisational systems meeting Baranger’s complexity rules

Try as I might, even with AI assistance, I cannot think of, or identify, an internal organisational management or production system that simultaneously meets all six of Baranger’s rules. That is, a complex system has to [1] be ungoverned or uncontrolled; [2] have constituents acting unpredictably; [3] possess a structure spanning several nested scales; [4] be capable of self-organising; [5] involve interplays between disorder and order; and [6] involve interplays between cooperation and competition at the connections between system scales.

Even if one or more of the first five rules apply, an organisational system is not complex unless Rule 6 also applies. Thus, by offering clearer criteria for determining complexity than have been available hitherto, these rules lend weight to the idea that an organisation is a ‘complicated’ entity that operates in complex environments [xv].
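The test described above can be written down as a simple checklist. The following Python sketch is illustrative only: the `RuleCheck` class, its field names and the example judgements are hypothetical, and deciding whether each rule applies remains a matter of expert judgement that no code can supply.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RuleCheck:
    """Hypothetical checklist: one yes/no judgement per rule as summarised above."""
    ungoverned: bool                  # [1] ungoverned or uncontrolled
    unpredictable_constituents: bool  # [2] constituents act unpredictably
    nested_scales: bool               # [3] structure spans several nested scales
    self_organising: bool             # [4] capable of self-organising
    disorder_order_interplay: bool    # [5] interplay between disorder and order
    cooperation_competition: bool     # [6] Rule 6: cooperation and competition


def is_complex(check: RuleCheck) -> bool:
    """A system counts as complex only when all six rules hold at once."""
    return all(getattr(check, f.name) for f in fields(check))


# Made-up judgements for illustration: five rules judged to apply, Rule 6 not
example = RuleCheck(True, True, True, True, True, False)
print(is_complex(example))  # False: without Rule 6 the system is not complex
```

Written this way, the argument becomes explicit: a single ‘no’ against any rule, Rule 6 included, is enough to move a system out of the complex category.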

If true, complexity ‘emergences’ are not the problem in accident causation; accidents and incidents remain the result of foreseeable breakdowns in complicated systems, and, therefore, are predictable.

Lessons learned

The idea that preventing accidents in CS is beyond the OSH profession’s capability seems to be a form of ‘learned helplessness’ [xvi]. Genuine complex systems do contain ambiguity and uncertainty [xvii]; therefore, the understanding of any CS will always be incomplete. Moreover, their spontaneous ability to self-organise without any external controls or guidance, and diverge from initial conditions as they evolve, adds to uncertainty.

Applying Baranger’s six rules helps identify specific aspects of complexity, with ‘Rule 6’ being absolutely critical to exerting control. Logic dictates that if simultaneous features of cooperation and competition at a connection were eliminated, that part of the overall system could no longer be considered complex.

Existing near-miss, incident and investigation records, and workplace observations, are useful sources to identify any problematic inter-system linkages. Applying Rule 6 to those featuring both competition and cooperation (e.g., where unhealthy competition is present between managers working on the same project [xviii]) would allow appropriate constraints and controls to be constructed and enacted, to bring the wider system to order [xix].
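As a rough illustration of that screening step, the sketch below filters a list of inter-system linkages for those showing cooperation and competition at the same time. Everything in it is an assumption made for illustration: the `Linkage` structure, its field names and the sample entries are hypothetical and not drawn from any real records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Linkage:
    """Hypothetical record of a connection between two parts of the system."""
    source: str
    target: str
    cooperation: bool  # the two parts rely on each other to deliver
    competition: bool  # the two parts also compete (e.g., for resources)


def rule6_candidates(linkages):
    """Return the linkages that show cooperation and competition together."""
    return [link for link in linkages if link.cooperation and link.competition]


# Made-up entries for illustration only; not taken from any real records
records = [
    Linkage("production planning", "maintenance", True, True),
    Linkage("training", "line management", True, False),
    Linkage("project manager A", "project manager B", True, True),
]

for link in rule6_candidates(records):
    print(f"{link.source} <-> {link.target}")
```

In practice the two flags would come from reviewing the narrative content of the records and observations, which is where the real investigative effort lies; the filter merely narrows attention to the linkages where constraints and controls are most likely to be needed.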

References

[i] Hollnagel, E. (2004). Barriers and Accident Prevention. Aldershot, UK: Ashgate.

[ii] Dekker, S., Cilliers, P., & Hofmeyr, J. H. (2011). The complexity of failure: Implications of complexity theory for safety investigations. Safety Science, 49(6), 939-945.

[iii] Sund Levander, M., & Tingström, P. (2020). Complicated versus complexity: when an old woman and her daughter meet the health care system. BMC Women’s Health, 20, 1-11.

[iv] Adriaensen, A., Decré, W., & Pintelon, L. (2019). Can complexity-thinking methods contribute to improving occupational safety in industry 4.0? A review of safety analysis methods and their concepts. Safety, 5(4), 65.

[v] Dien, Y., Dechy, N., & Guillaume, E. (2012). Accident investigation: From searching direct causes to finding in-depth causes–Problem of analysis or/and of analyst? Safety Science, 50(6), 1398-1407.

[vi] Heylighen, F. (2008). Complexity and self-organization. In P. Laplante (Ed.), Encyclopedia of Information Systems and Technology, 1, 250-259.

[vii] Kannampallil, T. G., Schauer, G. F., Cohen, T., & Patel, V. L. (2011). Considering complexity in healthcare systems. Journal of Biomedical Informatics, 44(6), 943-947.

[viii] Cooper, M. D. (2022). The emperor has no clothes: A critique of Safety-II. Safety Science, 152, 105047.

[ix] Mulgan, G. & Leadbeater, C. (2013). Systems innovation. London: Nesta.

[x] Baranger, M. (2000). Chaos, complexity, and entropy. New England Complex Systems Institute, Cambridge, 17.

[xi] Rotch, W. (1993). Management control systems: One view of components and their interdependence. British Journal of Management, 4(3), 191-203.

[xii] Poli, R. (2013). A note on the difference between complicated and complex social systems. Cadmus.

[xiii] IChemE (2022) E-Book: Learning Lessons from Major Incidents. https://www.icheme.org/media/20722/icheme-lessons-learned-database-rev-11.pdf

[xiv] Vorderbrueggen, J. B. (2011). Imperial sugar refinery combustible dust explosion investigation. Process Safety Progress, 30(1), 66-81.

[xv] Levy, D. L. (2000). Applications and limitations of complexity theory in organization theory and strategy. Public Administration and Public Policy, 79, 67-88.

[xvi] Ashforth, B. E. (1990). The organizationally induced helplessness syndrome: A preliminary model. Canadian Journal of Administrative Sciences/Revue Canadienne des Sciences de l’Administration, 7(3), 30-36.

[xvii] Wheatley, S. (2016). The emergence of new states in international law: The insights from complexity theory. Chinese Journal of International Law, 15(3), 579-606.

[xviii] Zebroski, E. L. (1991). Lessons learned from man-made catastrophes. Risk management: expanding horizons in nuclear power and other industries. New York: Hemisphere Publishing, 51-65.

[xix] Snowden, D., & Rancati, A. (2021). Managing complexity (and chaos) in times of crisis. A field guide for decision makers inspired by the Cynefin framework (No. JRC123629). Publications Office of the European Union.
