Tim Marsh looks into Dave Snowden’s Cynefin model…

The legendary Heather Beach recently started a thread online that generated a huge number of responses. It asked about excellence in analysis, and by far the most popular response from a variety of leading industry figures pointed to Dave Snowden and his Cynefin movement.

Cynefin turns out to be hugely influential; indeed, so much so that I found it’s the most famous and widely used word in the Welsh language. Naturally, as a proud Welshman, I felt utterly duty-bound to try to summarise the key thinking behind Dave Snowden’s model! (By the very nature of the task – simplifying something that stresses that simplifying complex issues is rarely helpful – I’m bound to fail, so I can only hope any Cynefin experts will forgive what follows!)

Cynefin is pronounced ‘Ker-nev-in’ and means ‘habitat’ or ‘place’, or more specifically ‘the place of your multiple belongings’. It reflects the fact that we are the product of many influences, not all of which we are consciously aware of.

What is Cynefin?

The first thing to note is that it is a sense-making model, not a categorisation model.

Typically, in classical two-by-two matrices, the model precedes the data, but in sense-making models it’s the data that influences any categorisation. The importance of this distinction is highlighted by the problem that comes with any simple categorisation: it’ll be easy and quick to use but may well lack subtlety. (Most readers will have winced at ‘pick a box, any box’ options and seen the box ‘human error’ ticked as the root cause of an incident on a simplistic check sheet, but as Sidney Dekker says, ‘human error is never ever the root cause; it’s the start point to the investigation’.)

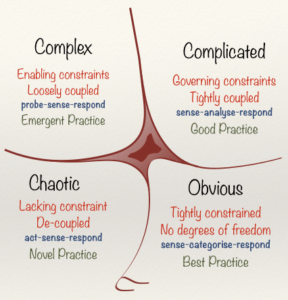

To walk through the four domains in turn:


Simple and Clear. Here the issues at hand will be predictable and ordered to any person of experience, so we can safely categorise them as such, apply best practice and monitor compliance. For example, you never want unsecured objects in a vehicle that’s rolling over, so the toolbox and laptop go in the boot and passengers wear seat belts.

Complicated. Again, there is a right answer, but here it won’t be self-evident, so we’ll need experts to analyse it in more depth. It’s important to note the shift in focus here: we’re not primarily seeking to categorise, we’re seeking to analyse. As such, we don’t want to be applying best practice; we want to be thinking about a variety of good practices, and the distinction is vital. In good practice there may be several different solutions to the same problem, and we’ll want to avoid ‘not invented here’ syndrome whilst maximising ownership and engagement. (Aubrey Daniels nailed this when he said “I’ll only impose my solution on you if it’s four times better than yours, as I know you’ll work three times harder on your own”.)

To continue the road safety theme, sometimes we’ll use a Pelican crossing, but often we’ll rely instead on the ‘Green Cross Code’. Ideally, we’ll use both simultaneously.

Complex. Distinguished from complicated by the fact that here we have Donald Rumsfeld’s infamous ‘unknown unknowns’. (With ‘complicated’ there are unknowns, but ones we know we don’t know, so, for example, we call in a relevant expert.) Here we first probe the situation with trial-and-error experimentation, making sure things are set up so that the consequences of the experiments can’t get out of hand. Sense-making emerges from the ongoing analysis and data, and in turn good practices come from this.

Andrew Hopkins’s ‘mindful safety’ concept says that there are always problems, and that mindful organisations proactively seek them out rather than wait for the problems to find them. The NASA inquiries into the Challenger disaster illustrate the fluid nature of inquiry and risk perception. Before the launch, the engineers were seriously worried about the seal erosion that went on to cause the catastrophe, but more senior managers stressed they were comfortable there were no problems, as no one had told them there were any. (This was followed by a notorious silence when they were challenged: ‘and you didn’t think to ask?’)

Chaotic. The definition is self-evident. In chaotic situations we must first try to establish some sort of order and control, so we’re back to rules and process, initially at least. There’s a working assumption that most organisations are merely confusing their ‘complicated’ and ‘complex’ rather than being in chaos… but I imagine half of all readers are thinking of organisations they know and laughing bitterly at that observation!

Problems and learning points for the health and safety world

Since problems are unique in subtle ways, applying simplistic and linear thinking to a complex problem can lead to… well… expensive consequences!

Perhaps the most important element of the model is that the boundary between simple and chaotic is best thought of as a cliff. The more we assume things are simple and under control (or, more usually, assume things remain simple and under control), the more likely we are to get complacent, and the more likely things are to drift towards the cliff edge and then… drop over it. (The other boundaries allow smoother and less fraught transitions.)

An obvious problem, of course, is that we’re not always aware of which space we’re operating in, and we’ll often default to our preferred or habitual way of operating. (James Reason is worth quoting here: “If yesterday you successfully solved a problem with a hammer, then tomorrow all problems are going to look like nails.”)

Most typically, if you’re a process expert, you’ll tend to see all problems as a failure of process. This perennial issue is addressed directly by what is perhaps my most photographed conference slide over the years. It shows how, once systems and procedures have reached the point of diminishing returns, organisations must make a conscious decision to embrace a holistic, proactive and humanistic approach; otherwise we’ll simply go around in ever-decreasing circles and get the infamous safety plateau effect that is so often seen.

It will (of course) often suit us to put things into the ‘simple’ category, especially if we’re at all process-obsessed, but we should minimise how often we do this (and regularly check that it remains appropriate), as in many respects it’s the most vulnerable of the four categories because of the ‘cliff’ risk.

Weak Signals

Seeing patterns in weak signals is something we all do really well with the benefit of hindsight, so setting up methodologies that examine weak signals proactively is key. Engineers on Deepwater Horizon dismissing worrying readings and even more alarming ‘kicks’ as merely the result of the ‘bladder effect’ (which doesn’t actually exist) is a classic example of conveniently rationalising signals that are telling us something isn’t going as planned. Poor culture survey scores, near-miss reports merely on a par with (rather than outnumbering) minor incidents, the common use of ‘them’ rather than ‘we’, and poor housekeeping are other (not so) weak signals if you’re looking for them.

More useful, perhaps, is the default approach of people who work in complex and/or chaotic areas (e.g. battlefield commanders, politicians), who will automatically get a team of experts together and collate as much information as they can in the hope that a solution will emerge. Here, any and all signals will be scrutinised for utility.

A practical application that all organisations should use proactively is Behavioural Root Cause Analysis: teams containing the real experts (the people who do the job) are always successful if given the time and resources. The passion and engagement such teams generate can also be seen in the ‘safety differently’ approach, which is essentially to assume workers are the solution, not the problem, and to ask them ‘what do you need?’

Consultancy is another problem area. Bad consultants: ‘I have this lovely solution… where is your problem?’ Good consultants: ‘I have a range of lovely solutions and an open mind to developing an entirely unique and tailored one… what is your problem?’

Rules and Regulations

An interesting topical issue is that when things get chaotic, the response of those in command is to establish order with new rules and regulations, which they often find themselves reluctant to give up when things calm down. (The concerns about the proposed ‘Justice Bill’ in the UK are a perfect example.) In the world of health and safety, we can consider simplistic catch-all PPE rules that really should be made more subtle once the principle is established. Specifically, once rules that were initially useful for bringing order to chaos have achieved their aim, they can look and feel ‘infantile’ to workers. This inevitably leads to resentment, sets entirely the wrong tone when we’re seeking empowerment, and undermines the truism “fewer, better rules, thoughtfully enforced”.

COVID Rules & Conclusion

In short, risk management is never at its most effective when thinking stops. For example, in 2020 nearly all of us found ourselves seated in a large café, or shopping in a supermarket, with two wide doors at either end of the space. Though seated (or paying at a till) right next to a deserted ‘in’ door, we were instructed to make our way past dozens of fellow diners or shoppers to the official ‘out’ door so as to ‘benefit’ from the one-way system. (All it would take is to instruct the person ‘guarding’ the door to enforce the one-way system when the ‘in’ door is genuinely busy, and not to shout ‘ONE WAY!’ by rote when it’s deserted, especially in a tone that implies ‘murderer’.)

The most obvious learning point from an understanding of the Cynefin model is that the world is not a simple place. Applying simplistic thinking to any risk management issue, let alone a complex and global one, kills people unnecessarily.

Read more from Tim in his monthly SHP blog series…


Thanks, Tim, for making another connection between safety and the many leadership and organisation development models and tools that are typically used to help leaders become more effective decision-makers or to provide insights into organisational culture. Cynefin and its variants and earlier manifestations (dating back to the late 1950s!) have shaped leadership thinking over the years; however, by showing the importance of using it to influence people’s thinking and approach at all levels, I believe you have highlighted its value in relation to safety culture and, of course, resilience (engineering).

Thank you, Kevin. As wellbeing (and mental health) increasingly merge with safety, and we move more and more towards holistic and interconnected models of error management, I’m finding I’m saying “excellence is just excellence”… well, more and more!