Robot safety part 5: pattern recognition

John Kersey delves into some of the mysteries that aid machines in spotting patterns in our working lives.

Pattern recognition: A pattern is a particular configuration of data; for example ‘A’ is a composition of three strokes. Pattern recognition is the detection of such patterns.

Machine Learning – Ethem Alpaydin – MIT Press

Sitting on a Florida beach back in 1948, Norman Woodland was grappling with a problem. He and his colleague Bernard Silver were working on a system to classify product information at supermarket checkouts. The college he taught at had rejected the proposal, but they thought a viable system could be found. Norman idly scratched the dots and dashes of Morse code (a signalling code first used for telegraphy) in the sand, and an impulse led him to extend the dots and dashes downwards to form a pattern of lines of varying width. Thus the familiar barcode, the basis of the UPC (Universal Product Code) still used at checkouts today, was born.

Here is an early form of pattern recognition, but it needed a further component to make it work: a scanner or reader. A safety application of the barcode is labelling assets, such as electrical equipment that has been portable appliance tested. Beyond these basic forms, pattern data can be numerical, textual or graphical, and computers can recognise patterns, and hence objects, in a variety of ways.

Simple ways of recognition

The simplest way is template matching, where the object is compared against stored templates and recognised as a definite object when it is a literal or close match, as with the barcode. However, the task is not always so simple, and other means then have to be used to classify the object. With machine learning techniques, the aim is to create a training set of known data, such as images, which is used to assign the unknown object to the class that best meets the criteria. An example is the Captcha security system, where various unclear images are presented to users, who have to determine which ones show a “road” to prove that it is a human wishing to enter the system (although by October 2017 advances in machine learning had rendered the then-current system open to exploitation).

At one time it was conventional wisdom that pattern recognition was one task where humans had an advantage, as humans can make judgements or inferences from incomplete information. New methods of pattern recognition, together with more powerful programs and processors, have levelled the playing field.
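As a rough illustration of template matching, the sketch below compares an unknown pattern with a handful of stored templates and picks the closest one, rejecting it if nothing is close enough. The 5x3 glyphs, the names `TEMPLATES` and `classify`, and the mismatch limit are all assumptions made for this example; they are not taken from any barcode standard or commercial system.

```python
# Hypothetical 5x3 binary templates; '#' marks an inked cell.
TEMPLATES = {
    "I": ["###", ".#.", ".#.", ".#.", "###"],
    "L": ["#..", "#..", "#..", "#..", "###"],
    "T": ["###", ".#.", ".#.", ".#.", ".#."],
}

def mismatches(a, b):
    """Count the cells that differ between two equally sized grids."""
    return sum(ca != cb for row_a, row_b in zip(a, b) for ca, cb in zip(row_a, row_b))

def classify(sample, max_mismatches=2):
    """Return the label of the closest template, or None if nothing is close enough."""
    label, template = min(TEMPLATES.items(), key=lambda kv: mismatches(sample, kv[1]))
    return label if mismatches(sample, template) <= max_mismatches else None

# A slightly damaged 'T': one cell is missing from the top bar.
noisy_t = ["##.", ".#.", ".#.", ".#.", ".#."]
print(classify(noisy_t))
```

The mismatch limit is what makes this a "close match" rather than a literal one: raising it tolerates more damage but also increases the chance of a wrong label.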

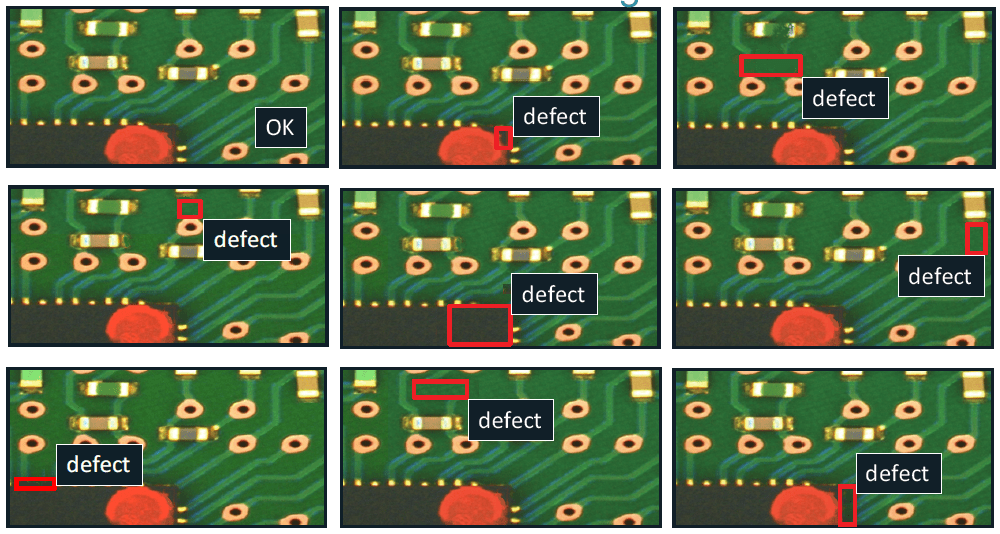

The variability of the image type and the number of possible classes determine the size of the training set needed (in the case of images, how many images). Systems working on images are often called vision systems and are now widely employed for visual inspection duties in manufacturing. Advances have meant that the training set can be smaller, the process is quicker thanks to more powerful software and hardware, and accuracy has increased. A vision system can assess its own accuracy, and confidence levels of 98%+ are not uncommon (trained expert human inspectors for tasks such as fault finding are rated at about 95%). A safety application being explored for vision systems is identifying whether individuals are wearing safety workwear, or granting access to hazardous areas only to those wearing the correct protective equipment. Exception reporting would be the obvious strategy here, identifying individuals who do not meet the safety criteria.
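Exception reporting of this kind can be sketched in a few lines. The example assumes a vision system that returns, for each person, a verdict and a self-reported confidence; the `Detection` record, the `exceptions` function and the 0.98 cut-off are hypothetical names and values chosen for illustration only.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    person_id: str
    wearing_ppe: bool   # the vision system's verdict
    confidence: float   # 0.0 to 1.0, as reported by the system itself

def exceptions(detections, min_confidence=0.98):
    """Return detections needing attention: no PPE, or a verdict the system is unsure of."""
    return [d for d in detections if not d.wearing_ppe or d.confidence < min_confidence]

readings = [
    Detection("A101", True, 0.99),   # compliant and confident: not reported
    Detection("A102", False, 0.99),  # not wearing PPE: reported
    Detection("A103", True, 0.80),   # low confidence: reported for a human check
]
for d in exceptions(readings):
    print(d.person_id, "flagged")
```

Routing low-confidence verdicts to a person, rather than silently accepting them, is one possible design choice; a stricter site might deny access until the check is repeated.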

Seeing the trees in the forest

In a similar way, other forms of pattern recognition, such as sorting incidents into classes like pre-defined root causes, can be done using decision trees. This is a familiar process for safety practitioners, as fault tree analysis is a closely related tree-based technique. It is further aided by algorithms such as random forests, which combine many decision trees to identify the most applicable cause from the sample. Cognitive systems such as IBM Watson make this even more relevant, and we have seen this used in the Ava chatbot system developed by Airsweb, which converts speech into text and can then classify the incident.
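To give a feel for how several trees can vote on a root cause, here is a toy sketch. It shows only the majority-vote step that a random forest relies on: the three trees are written by hand, whereas a real random forest learns its trees from incident data. The field names and root-cause labels are invented for the example and do not come from Ava, Watson or any other product.

```python
from collections import Counter

# Each "tree" is a hand-written chain of yes/no questions ending in a root cause.
def tree_training(incident):
    if not incident["trained"]:
        return "inadequate training"
    return "equipment failure" if incident["equipment_fault"] else "procedure not followed"

def tree_equipment(incident):
    if incident["equipment_fault"]:
        return "equipment failure"
    return "inadequate training" if not incident["trained"] else "procedure not followed"

def tree_supervision(incident):
    if incident["supervised"]:
        return "equipment failure" if incident["equipment_fault"] else "procedure not followed"
    return "inadequate training" if not incident["trained"] else "procedure not followed"

FOREST = [tree_training, tree_equipment, tree_supervision]

def classify_incident(incident):
    """Let every tree vote and return the most common root cause."""
    votes = Counter(tree(incident) for tree in FOREST)
    return votes.most_common(1)[0][0]

incident = {"trained": True, "equipment_fault": True, "supervised": False}
print(classify_incident(incident))
```

Because the trees ask their questions in different orders, they can disagree on an awkward case; the vote smooths out the quirks of any single tree.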

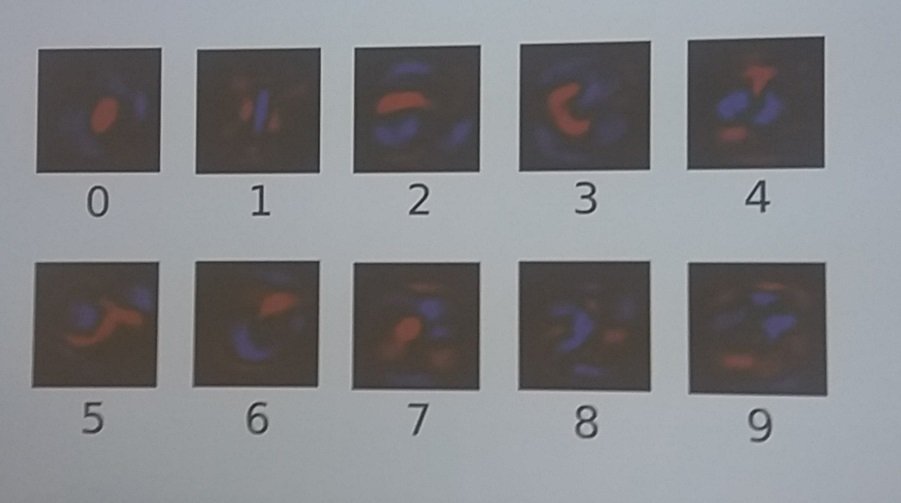

Computers copying human thought patterns

Most modern practice centres on the use of artificial neural networks, which are loosely modelled on the way human brains work. Just as human neurons fire when a certain level of stimulation is reached, so too do artificial neurons. The difference is that they are guided by quantitative values: when a certain threshold value is reached, a node excites a neighbouring node in a given direction. A common arrangement is the multilayer perceptron, where the neurons are arranged in layers so that, when the excitation level is reached, neurons in one layer excite neurons in the next. In this way the machine builds up a richer perception of the object, which aids accuracy in putting it in the correct class.

A further development is the use of machine learning and deep learning models. An example offered by Sanna Randelius (IBM vision specialist) for vision systems gives the machine learning stages as:
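The threshold behaviour described above can be shown with a very small network. In this sketch the weights and thresholds are picked by hand so the result is easy to follow; in practice they would be learned from a training set. The function names are illustrative assumptions.

```python
def neuron(inputs, weights, threshold):
    """Fire (1) when the weighted sum of the inputs reaches the threshold, else stay quiet (0)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def two_layer_network(a, b):
    """Two first-layer neurons feed one output neuron: 'a or b, but not both'."""
    either = neuron([a, b], [1, 1], threshold=1)  # fires if at least one input is on
    both = neuron([a, b], [1, 1], threshold=2)    # fires only if both inputs are on
    # The negative weight lets 'both' suppress the output neuron.
    return neuron([either, both], [1, -1], threshold=1)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", two_layer_network(a, b))
```

No single threshold neuron can produce this "one or the other, but not both" pattern on its own, which is a simple demonstration of why arranging neurons in layers gives a richer result.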

- Input (sample image)

- Feature extraction (what makes that image different?)

- Classification (which class does the feature typify?)

- Output (class type, e.g. car, not car)

Whereas deep learning would be:

- Input (sample image)

- Feature extraction plus Classification

- Output

Thus the process is speeded up. As noted by Sanna, every ImageNet classification winner since 2012 has used deep learning neural networks, to the point that they have become the standard pattern recognition approach for vision systems. It wasn’t until 2013 that vision systems could approach the human performance zone for classification (an error rate of between 5 and 10%); by 2015 they had exceeded it.

When computer vision systems classify objects, they then label the image with the type, for example “defect”.
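The four machine learning stages listed earlier can be sketched as separate steps. The feature (contrast within a small patch of pixels) and the rule that turns it into a label are hand-picked assumptions for this example, and the function names are hypothetical. In a deep learning system the two middle steps would be replaced by a single network that learns both from the training images, which is not shown here.

```python
def extract_features(image):
    """Feature extraction: reduce a grid of 0-255 pixel values to two hand-picked numbers."""
    pixels = [p for row in image for p in row]
    mean_brightness = sum(pixels) / len(pixels)
    contrast = max(pixels) - min(pixels)
    return mean_brightness, contrast

def classify(features, contrast_limit=100):
    """Classification: a hypothetical rule that treats a high-contrast patch as a defect."""
    _, contrast = features
    return "defect" if contrast > contrast_limit else "ok"

def inspect(image):
    """Input -> feature extraction -> classification -> output label."""
    return classify(extract_features(image))

clean_patch = [[200, 205], [198, 202]]      # evenly bright surface
scratched_patch = [[200, 40], [198, 202]]   # one dark pixel, standing in for a scratch
print(inspect(clean_patch), inspect(scratched_patch))
```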

The implications for safety

Safety is often about spotting things that are wrong – hazards, near misses, accidents and so on. If you are new school and doing Safety Differently then it’s more about spotting things that are right all the time. Either way pattern recognition works.

Disclaimer. The views expressed in this article are those of the author and do not necessarily represent those of any commercial, academic or professional institution the author is associated with.


SHP - Health and Safety News, Legislation, PPE, CPD and Resources